Blocking

Operating Systems simulate parallel systems by time-sharing the CPU between processes.

Each process appears to have its own cpu, its own memory space and its own stack, but these are just slices of the main CPU and the main memory.

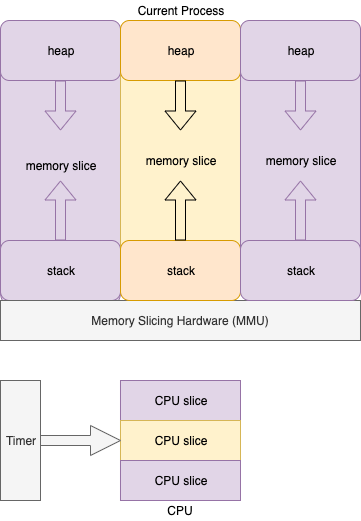

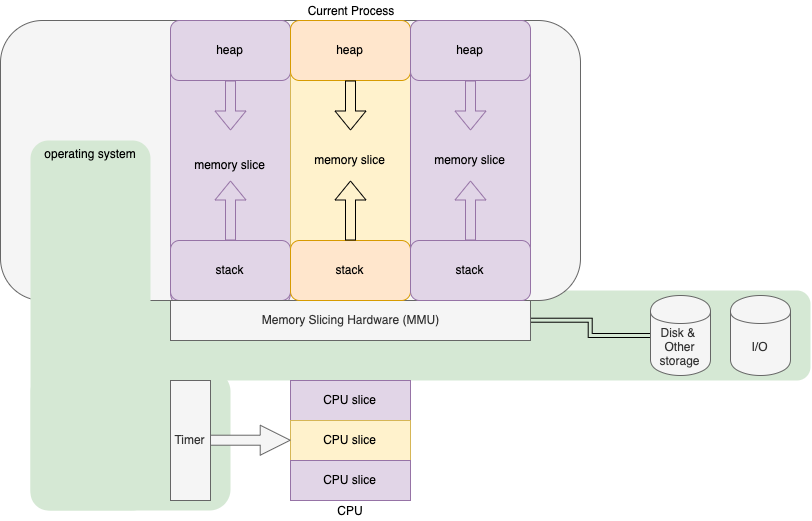

I have tried to diagram the situation in Fig. 1 and Fig. 2.

Fig. 1 Stylized view of Multi-Processing

Fig. 2 Stylized view of Multi-Processing Including Time-Sharing Operating System

A process "blocks" when:

- it's allotted time is up

- it tries to perform some off-cpu operation (e.g. I/O) allowing the CPU to be used by other processes.

The operating runs some software to switch to a new process (e.g. reload MMU registers, change the timer setting, grab pages from disk, etc.).

This is also called "context switching".

Context switching and hardware support are used to isolate processes from one another.

Time-sharing

https://en.wikipedia.org/wiki/Time-sharing

Yield

"Yield" is another term for blocking.

Loops / Recursion

Time-slicing a CPU is necessary only because PLs (Programming Languages) allow programs to contain long-running loops and deep recursion.

Time-slicing would not be necessary if PLs prohibited the use of long-running loops and deep recursion. [N.B. This would be possible, if compilers compiled loop-ends into "yields" with a feedback-message-send that would signal the need for another pass through the loop].

Loops and recursion are the exception not the rule.

We incur accidental complexity because we encourage the use of loops and recursion.

Loops / recursion makes sense in a language that is meant to be used inside a network node - iPL (inner PL).

Loops / recursion do not make sense in a language that is meant to be used to describe a network of nodes - oPL (outer PL).

1950's

Time-sharing was invented in the 1950's.

At that time, CPUs were very expensive.

At that time, memory was very expensive.

Time-sharing is an optimization that allows for the use of one computer to simulate many computers.

2020's

CPUs are no longer expensive

Memory is no longer expensive.

Yet, we continue to use the time-sharing optimization.

Distributed Programming

In distributed programming, we use CPUs like candy - each app gets its own CPU and memory.

A piece of software, in distributed programming, runs at its own speed and never blocks.

Bit Spinning

In a distributed environment, where CPUs are like candy, a piece of software waits for input by bit-spinning[1].

In a time-shared environment, bit-spinning is discouraged because it wastes CPU power that could be used by other processes.

Efficiency

Adding the overhead of using an Operating System (which is just a library) adds overhead and degrades the efficiency of the software.

The use of an Operating System improves efficiency of a system only if most apps (processes) access much-slower I/O devices that would require the use of bit-spinning.

Calculator software (one-in-one-out, functional) would be more efficient without the addition of an operating system.

IoT devices would be more efficient (aka "cheaper") without the use of operating systems.

[IMO, what we really want is to be able to plug together software components to build the least amount of software-plus-operating-system possible to solve any one problem. With current programming languages, this is not easy to do, because they are, mostly, iPLs and not oPLs that encourage structured design of distributed computing. (See Loops / Recursion for the definition of iPLs and oPLs).]

Blockchain and Sybil Attacks

Blockchain developers mention Sybil Attacks.

Sybil Attacks are the idea of using one CPU to simulate many computers, i.e to give one computer multiple personalities like the Sybil character in the movie.[2]

Slicing a computer up into multiple personalities makes it look like there are more participants on a blockchain than there really are.

If the multiple personalities collude to sway votes about who should receive $'s, then this is called a Sybil attack.

The counter-measure to such Sybil attacks is to insist that all participants solve a puzzle before casting a vote. The puzzle is chosen to be so onerous that a single computer must work on it full time. If a computer is sliced up into multiple personalities, the probability of getting the right answer to the puzzle in any of the personalities is very low and only single-personality computers can solve the puzzle and can get to vote.

[1] Bit-spinning is a "technical" term for spinning in a tight loop waiting for input.

[2] https://en.wikipedia.org/wiki/Sybil_(1976_film)